Peter Denton

AI systems are becoming increasingly capable of pursuing sophisticated goals without human intervention. As these systems begin to be used to make economic transactions, they raise important questions for central banks, given their role overseeing money, payments, and financial stability. Leading AI researchers have highlighted the importance of retaining governance control over such systems. In response, AI safety researchers have proposed developing infrastructure to govern AI agents. This blog explores how financial infrastructure may emerge as a particularly viable governance tool, offering pragmatic, scalable, and reversible chokepoints for monitoring and controlling increasingly autonomous AI systems.

What is agentic AI and why might it be hard to govern?

Some advanced AI systems have exhibited forms of agency: planning and acting autonomously to pursue goals without continuous human oversight. While definitions of ‘agency’ are contested, Chan et al (2023) describe AI systems as agentic to the extent they exhibit four characteristics: (a) under-specification: pursuing goals without explicit instructions; (b) direct impact: acting without a human in the loop; (c) goal-directedness: acting as if they were designed for specific objectives; and (d) long-term planning: sequencing actions over time to solve complex problems.

These traits make agentic AI powerful, but also difficult to control. There may be good reason to think that agentic AI, unlike traditional algorithms, could resist being shut down, even when used as a tool. And, as modern AI systems are increasingly cloud-native, distributed across platforms and services, and capable of operating across borders and regulatory regimes, there is often no single physical ‘off-switch’.

This creates a governance challenge: how can humans retain meaningful control over agentic AI that may operate at scale?

From regulating model development to regulating post-deployment

Many current proposals to mitigate AI risk emphasise upstream control: regulating the use of computing infrastructure needed to train large models, such as advanced chips. This enables governments to control the development of the most powerful systems. For example, the EU’s AI Act and a since-rescinded Biden-era executive order include provisions for monitoring high-end chip usage. Computing power is a useful control point because it is detectable, excludable, quantifiable, and its supply chain is concentrated.

But downstream control (managing what pretrained models do once deployed) is likely to become similarly important, especially as increasingly advanced base models are developed. A key factor affecting the performance of already-pretrained models is ‘unhobbling’, a term used by AI researcher Leopold Aschenbrenner to describe substantial post-training improvements that enhance an AI model’s capabilities without significant extra computing power. Examples include better prompting strategies, longer input windows, or access to feedback systems to improve and tailor model performance.

One powerful form of unhobbling is access to tools, like running code or using a web browser. Like humans, AI systems may become far more capable when connected to services or software via APIs.

Financial access as a crucial post-deployment tool

One tool that may prove crucial to the development of agentic AI systems is financial access. An AI system with financial access may trade with humans and other AI systems to perform tasks at lower cost, or to complete tasks it would otherwise be unable to perform, enabling specialisation and enhancing co-operativeness. An AI system could hire humans to complete challenging tasks (in 2023, GPT-4 hired a human via TaskRabbit to solve a CAPTCHA), buy computational resources to replicate itself, or advertise on social media to influence perceptions of AI.

Visa, Mastercard, and PayPal have all recently announced plans to integrate payments into agentic AI workflows. This suggests a near-future world where agentic AI is routinely granted limited spending power. This may yield real efficiency and consumer welfare gains. But it also introduces a new challenge: should AI agents with financial access be subject to governance protocols, and, if so, how?

Why financial infrastructure for AI governance

Financial infrastructure possesses several characteristics that make it a particularly viable mechanism for governing agentic AI. Firstly, financial activity is quantifiable, and, if financial access significantly enhances the capabilities of agentic AI, then regulating that access could serve as a powerful lever for influencing its behaviour.

Moreover, financial activity is concentrated, detectable, and excludable. In international political economy, scholars like Farrell and Newman have shown how global networks tend to concentrate around key nodes (like banks, telecommunication firms, and cloud service providers), which gain outsized influence over flows of value – including financial value. The ability to observe and block transactions (what Farrell and Newman call the ‘panopticon’ and ‘chokepoint’ effects) gives these nodes – or institutions with political authority over these nodes – the ability to enforce policy.

This logic already underpins anti-money laundering (AML), know-your-customer (KYC), and sanctions frameworks, which legally oblige major clearing banks, card networks, payments messaging infrastructure, and exchanges to monitor and restrict illegal flows. Enforcement need not be perfect – just sufficiently centralised in networks to impose adequate frictions on undesired behaviour.

The same mechanisms could be adapted to govern agentic AI. If agentic AI increasingly relies upon existing financial infrastructure (eg Visa, SWIFT, Stripe), then withdrawing access to those systems could serve as a de facto ‘kill switch’. AI systems without financial access cannot act at a meaningful scale – at least within today’s global economic system.

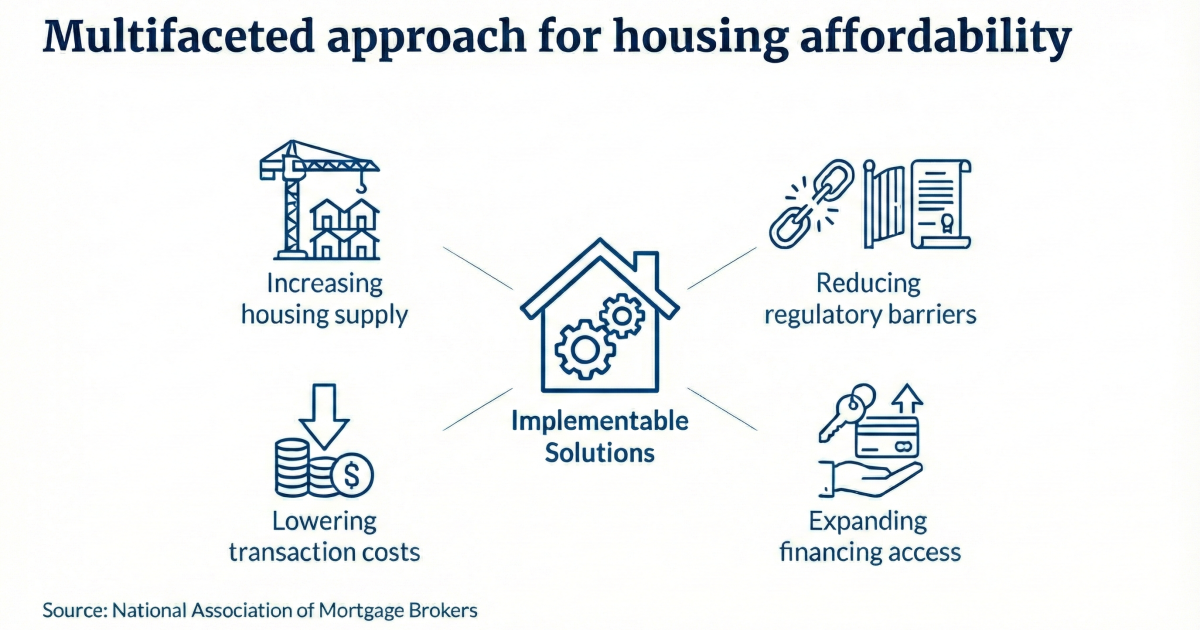

Policy tools could be used to create a two-tiered financial system, which preserves humans’ existing autonomy over their financial affairs, while ringfencing prospective AI agents’ financial autonomy. Drawing on existing frameworks for governance infrastructure (eg Chan et al (2025)), possible regulations might include: (i) mandatory registration of agent-controlled wallets; (ii) enhanced API management; (iii) purpose-restrictions or volume/value caps on agent-controlled wallets; (iv) transaction flagging and escalation mechanisms for unusual agent-initiated activity; or (v) pre-positioned denial of service powers against agents in high-risk situations.
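To make these options more concrete, the sketch below shows one way a payment provider might combine registration (i), purpose restrictions and value caps (iii), escalation (iv), and suspension powers (v) in a single authorisation check. It is purely illustrative: the class names, fields, thresholds, and the three-way approve/escalate/deny outcome are all assumptions made for this example, and do not describe any existing scheme or provider's API.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    ESCALATE = "escalate"  # (iv) hold the payment for human review
    DENY = "deny"


@dataclass
class AgentWallet:
    """Hypothetical record for a registered agent-controlled wallet."""
    wallet_id: str
    responsible_party: str        # (i) registration links the wallet to a legal person
    allowed_purposes: frozenset   # (iii) purpose restrictions
    per_txn_cap: float            # (iii) value cap per transaction
    daily_cap: float              # (iii) cumulative daily cap
    spent_today: float = 0.0
    suspended: bool = False       # (v) pre-positioned denial switch


class AgentWalletRegistry:
    """Hypothetical registry that authorises agent-initiated payments."""

    def __init__(self):
        self._wallets = {}

    def register(self, wallet):
        self._wallets[wallet.wallet_id] = wallet

    def suspend(self, wallet_id):
        self._wallets[wallet_id].suspended = True

    def reinstate(self, wallet_id):
        # Suspension is reversible: access can be restored later.
        self._wallets[wallet_id].suspended = False

    def authorise(self, wallet_id, amount, purpose):
        wallet = self._wallets.get(wallet_id)
        # Unregistered or suspended wallets cannot transact at all.
        if wallet is None or wallet.suspended:
            return Decision.DENY
        # Payments outside the wallet's declared purposes are refused.
        if purpose not in wallet.allowed_purposes:
            return Decision.DENY
        # Payments that would breach a cap are flagged rather than settled.
        if amount > wallet.per_txn_cap:
            return Decision.ESCALATE
        if wallet.spent_today + amount > wallet.daily_cap:
            return Decision.ESCALATE
        wallet.spent_today += amount
        return Decision.APPROVE


registry = AgentWalletRegistry()
registry.register(AgentWallet(
    wallet_id="agent-001",
    responsible_party="Example Deployer Ltd",
    allowed_purposes=frozenset({"groceries"}),
    per_txn_cap=50.0,
    daily_cap=100.0,
))
print(registry.authorise("agent-001", 31.0, "groceries"))  # within caps
print(registry.authorise("agent-001", 31.0, "compute"))    # purpose not allowed
registry.suspend("agent-001")
print(registry.authorise("agent-001", 5.0, "groceries"))   # wallet suspended
```

In this sketch, cap breaches are escalated rather than denied outright, on the assumption that a human reviewer could still approve a legitimate payment. Purpose violations and suspensions are hard denials. Where to draw that line would be a policy choice rather than a technical one.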

This approach represents a form of ‘reversible unhobbling’: a governance strategy where AI systems are granted access to tools in a controllable, revocable way. If fears about agentic AI prove overstated, such policies may be scaled back.

Authority over these governance mechanisms warrants further exploration. Pre-positioned controls in high-risk scenarios that may affect financial stability could be included within a central bank’s remit, while consumer regulators might oversee the registration of agent-controlled wallets, and novel API management standards could be embedded within industry standards. Alternatively, a new authority responsible for governing agentic AI could assume responsibility.

What about crypto?

Agentic AI could hold crypto wallets and make pseudonymous transactions beyond conventional financial chokepoints. At least at present, however, most meaningful economic activity (eg procurement and labour markets) is still intertwined with the regulated financial system. Even for AI systems using crypto, fiat on- and off-ramps remain as chokepoints. Monitoring these access points preserves governance leverage.

Moreover, a range of sociological and computational research suggests that complex systems tend to produce concentrations – independent of network purpose. Even in decentralised financial networks, key nodes (eg exchanges, stablecoin issuers) are likely to emerge as chokepoints over time.

Still, crypto’s potential for decentralisation and resilience should not be dismissed. Broadening governance may require novel solutions, such as exploring the role for decentralised identity or smart contract design to support compliance.

Beyond technocracy: the legal and philosophical challenge

As AI systems are increasingly used as delegated decision-makers, the boundary between human and agentic AI activity will blur. Misaligned agents could initiate transactions beyond a user’s authority, while adversaries may exploit loosely governed agent wallets to engage in undesirable economic activity. As one benign example of misalignment, a Washington Post journalist recently found his OpenAI ‘Operator’ agent had bypassed its safety guardrails and spent $31 on a dozen eggs (including a $3 priority fee and $3 tip), without first seeking user confirmation.

This raises both legal and philosophical questions. Who is responsible when things go wrong? And, at what point does delegation become an abdication of autonomy? Contemporary legal scholarship has discussed treating AI systems under various frameworks, including: principal-agent models, where human deployers are responsible; product liability, which may assign liability to system developers; and platform liability, which may hold platforms hosting agentic AI responsible.

Financial infrastructure designed to govern agents, then, must transparently account for the increasingly entangled philosophical and legal relationship between humans and AI. Developing evidence-seeking governance mechanisms that help us understand how agentic AI uses financial infrastructure may be a good place to start.

Conclusion

As AI systems move from passive prediction to agentic action, governance frameworks will need to evolve. While much attention currently focuses on compute limits and model alignment, financial access may become one of the most effective control levers humans have. Agent governance through financial infrastructure offers scalable, straightforward, and reversible mechanisms for limiting risky AI autonomy, without stifling innovation across agent infrastructure that has yet to be built.

According to AI governance researcher Noam Kolt, ‘computer scientists and legal scholars have the opportunity and responsibility to, together, shape the trajectory of this transformative technology’. But central bankers shouldn’t let technologists and lawyers be the only game in town. Without a physical plug to pull, the ability to monitor, audit, suspend, restrict, or deny financial activity may be a valuable tool in a world of AI agents.

Peter Denton works in the Bank’s Payments Operations Division.

If you want to get in touch, please email us at [email protected] or leave a comment below.

Comments will only appear once approved by a moderator, and are only published where a full name is supplied. Bank Underground is a blog for Bank of England staff to share views that challenge – or support – prevailing policy orthodoxies. The views expressed here are those of the authors, and are not necessarily those of the Bank of England, or its policy committees.

